Intel says Keem Bay VPU offers 10 times the AI performance of the Myriad X

Nov 13, 2019, by Eric Brown

Intel announced a third-generation VPU code-named "Keem Bay" that it says will offer 10 times the AI performance of its Myriad X chip. Intel claims it matches the performance of Nvidia's Jetson Xavier AGX with up to 4.7 times greater power efficiency.

At its AI Summit in San Francisco yesterday, Intel announced a third-generation version of its Movidius Myriad AI technology. Due in the first half of 2020 in M.2 and PCIe modules, the "Keem Bay" chip will provide 10 times the inference performance of the 1-TOPS Myriad X vision processing unit (VPU) found on Intel's Neural Compute Stick 2. This suggests roughly 10 TOPS of performance.

Myriad X also powers Aaeon’s AI Core X modules and IEI’s Mustang modules, including the Mustang-MPCIE-MX2 mini-PCIe card. The VPU is also used by Luxonis’ upcoming DepthAI Raspberry Pi HAT.

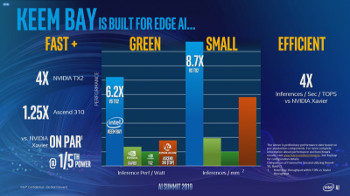

Keem Bay slide from Intel’s AI Summit


Source: VentureBeat

According to VentureBeat, Intel further claims that Keem Bay will be four times faster at inference than Nvidia's Jetson TX2 while drawing only a third to a fifth of the power. In addition, Keem Bay reportedly delivers "four times the inferences per second per TOPS" of Nvidia's high-end Jetson Xavier AGX, which offers up to 30 TOPS (trillions of operations per second). Nvidia recently announced a scaled-down version called the Jetson Xavier NX, with 14 TOPS (at 10W) or 21 TOPS (at 15W) of AI performance.


Finally, Intel claimed that Keem Bay offers 1.25 times the inference performance of Huawei's HiSilicon Ascend 310 AI accelerator, which is rated at up to 16 TOPS at 8W. Such comparisons are muddied by the different power envelopes. They are further complicated by architecture: like the Ascend 310, the Myriad VPUs are AI co-processors, while the Jetson modules run AI algorithms primarily on GPUs that work closely with onboard CPU cores. A CNXSoft post speculates that Keem Bay consumes around 6W.

Nervana NNP-T

Intel also demonstrated its previously announced Intel Nervana Neural Network Processors (NNP) for training (NNP-T1000) and inference (NNP-I1000). Aimed at cloud and datacenter applications, the NNP modules are currently rolling out to customers.

In addition, Intel announced Edge AI DevCloud, also referred to as Intel DevCloud for the Edge. Used together with Intel's OpenVINO toolkit, the platform lets developers try, prototype, and test AI solutions on a broad range of Intel processors before buying hardware. Intel expects its AI solutions to generate more than $3.5 billion in revenue in 2019.

Further information

The Keem Bay VPU will be available in the first half of 2020. More information may be found in Intel’s Keem Bay announcement.
