Aaeon launches M.2 and mini-PCIe based AI accelerators using low-power Kneron NPU

Oct 16, 2019, by Eric Brown

Aaeon’s M.2 and mini-PCIe “AI Edge Computing Modules” are based on Kneron’s energy-efficient, dual Cortex-M4-enabled KL520 AI SoC, which offers 0.3 TOPS of NPU performance on only half a Watt.

Aaeon took an early interest in edge AI acceleration with computers built on the Arm-based Nvidia Jetson TX2, such as the Boxer-8170AI. More recently, it has been delivering M.2 and mini-PCIe form-factor AI Core accessories, equipped with Intel Movidius Myriad 2 and Myriad X Vision Processing Units (VPUs), for its Boxer computers and UP boards. Now, it has added another approach to AI acceleration by launching a line of M.2 and mini-PCIe AI acceleration cards built around Kneron’s new KL520 AI SoC.

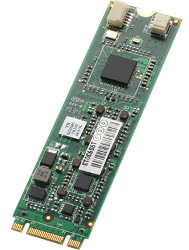

M2AI-2280-520 (left) and Mini-AI-520


Aaeon is taking orders for three KL520-based AI Edge Computing Module cards aimed at IoT, smart home, security, and mobile devices:

- M2AI-2280-520 — M.2 B-Key 2280

- M2AI-2242-520 — M.2 2242

- Mini-AI-520 — mini-PCIe

Aaeon’s 0 to 70°C tolerant AI Edge Computing Modules operate at 0.5W to 0.9W. There do not appear to be any functional differences between the three modules, which all supply UART and JTAG debug interfaces and communicate with the host processor via USB signals. The modules support acceleration for the ONNX, TensorFlow, Keras, and Caffe frameworks, with models including VGG16, ResNet, GoogLeNet, YOLO, Tiny YOLO, LeNet, MobileNet, and DenseNet.

M2AI-2242-520 with front and back detail views


The KL520 AI SoC combines dual Cortex-M4 MCUs with Kneron’s Neural Processing Unit (NPU) IP, which can be licensed separately. The power-efficient KL520 supports co-processor use, as deployed in Aaeon’s AI Edge Computing Modules, in scenarios where it would typically connect to an embedded Linux computer. The SoC can also be used as a standalone, AI-enabled IoT node in applications such as smart door locks.


The KL520 AI SoC is designed to accelerate general AI models such as facial and object recognition, gesture detection, and driver behavior for AIoT applications including access control, automation, security, and surveillance. It can also be used to monitor consumer behavior in retail settings, a trend that could push even more customers to shop online. Aaeon notes, however, that the solution enhances privacy and reduces latency because edge AI devices do not require a cloud connection.

Aaeon AI Edge Computing Module block diagram (left) and KL520 AI SoC


The Kneron NPU within the KL520 delivers more than 1.5 TOPS per Watt, claims San Diego-based Kneron. The IP can easily operate at under 0.5W “and can reach as low as 5 mW for specific applications,” says the company.

According to an EETimes story last week that preceded Aaeon’s announcement, the KL520 AI SoC provides only 0.3 TOPS of AI acceleration performance at 0.5 Watts, equivalent to 0.6 TOPS/W. By comparison, the Myriad X runs at 1 TOPS, but presumably with higher power consumption. This gives Aaeon customers a choice of two levels of AI acceleration: the less powerful, but more power-efficient AI Edge Computing Modules and the faster, but more demanding Myriad X-enabled AI Core X modules.
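The efficiency arithmetic above is easy to reproduce. The following is a minimal sketch in Python that uses only the KL520 figures quoted in this story (0.3 TOPS at 0.5 W). The helper names `tops_per_watt` and `watts_needed` are made up for illustration, and the 1 TOPS projection is a what-if calculation, not a measurement of the Myriad X or any other part.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Return efficiency in TOPS per Watt."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tops / watts


def watts_needed(tops: float, efficiency: float) -> float:
    """Return the power needed to reach `tops` at a given TOPS/W efficiency."""
    if efficiency <= 0:
        raise ValueError("efficiency must be positive")
    return tops / efficiency


# Figures reported for the KL520 SoC in this story: 0.3 TOPS at 0.5 W.
kl520_efficiency = tops_per_watt(0.3, 0.5)

# Assumption for illustration only: the power a 1 TOPS accelerator would
# draw if it matched the KL520's efficiency. This is not a Myriad X figure.
hypothetical_watts_for_1_tops = watts_needed(1.0, kl520_efficiency)

print(f"KL520 SoC efficiency: {kl520_efficiency:.2f} TOPS/W")
print(f"Power for 1 TOPS at that efficiency: {hypothetical_watts_for_1_tops:.2f} W")
```

The gap between this SoC-level result and Kneron's claim of more than 1.5 TOPS per Watt for the NPU IP is plausibly explained by the rest of the SoC sharing the power budget, although the story does not break the numbers down.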

Kneron’s 57MB facial recognition model running on its NPU has been recognized by NIST (National Institute of Standards and Technology) as the best performing model under 100MB. Systems integrator TIIS used the model as part of a security system for the public banks of Taiwan. EETimes quotes Kneron COO Adrian Ong as saying that for embedded applications, the model can be compressed down to 32MB or even 16MB.

Kneron’s Reconfigurable Artificial Neural Network (RANN) architecture working in conjunction with an efficient compiler “can dynamically adapt to various computing architectures based on different AI applications,” says Kneron. The NPU can run different convolutional neural networks (CNNs) based on different applications, “regardless of kernel size, architecture requirements or input size.” This results in “very high MAC (multiply-accumulate) efficiency,” says the company.
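For readers unfamiliar with the term, MAC efficiency is commonly understood as the fraction of an accelerator's multiply-accumulate units that do useful work over a given number of cycles. The sketch below, in Python, counts the MACs in a single convolution layer and computes that fraction. The layer shape, the 256-unit array, and the cycle count are all hypothetical numbers chosen for illustration; none of them describe the KL520, and Kneron has not published its method of measuring efficiency in this story.

```python
def conv2d_macs(out_h: int, out_w: int, out_channels: int,
                in_channels: int, kernel_h: int, kernel_w: int) -> int:
    """Count multiply-accumulates for one standard 2D convolution layer.

    Each output element needs in_channels * kernel_h * kernel_w MACs.
    """
    return out_h * out_w * out_channels * in_channels * kernel_h * kernel_w


def mac_efficiency(useful_macs: int, mac_units: int, cycles: int) -> float:
    """Return useful MACs divided by the MACs the hardware could have done."""
    if mac_units <= 0 or cycles <= 0:
        raise ValueError("mac_units and cycles must be positive")
    return useful_macs / (mac_units * cycles)


# Hypothetical layer: 3x3 kernel, 64 input channels, 128 output channels,
# 56x56 output feature map.
layer_macs = conv2d_macs(56, 56, 128, 64, 3, 3)

# Hypothetical hardware: 256 MAC units that take 1,000,000 cycles on the layer.
efficiency = mac_efficiency(layer_macs, mac_units=256, cycles=1_000_000)

print(f"MACs in layer: {layer_macs:,}")
print(f"MAC efficiency: {efficiency:.1%}")
```

Changing the kernel size or input size in the example changes how evenly the work maps onto a fixed array, which is the kind of mismatch a reconfigurable architecture is meant to reduce.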

The KL520’s compiler is touted for its proprietary compression technology, which, together with RANN, enables ultra-small footprint AI acceleration for smart home and smartphone applications such as facial recognition. Finally, Kneron mentions that its technology is especially applicable to various 3D sensor technologies such as structured light, dual-camera, ToF, and Kneron’s own 3D sensing technology.

Aaeon is the first company to commercialize the KL520. Other partners listed in Kneron’s May 2019 KL520 announcement include Eltron, Himax, Alltek, Pegatron, Orbbec, and Datang Semiconductor. EETimes reported that a second-gen version of the Kneron NPU due for sampling in Q1 2020 “will be able to accelerate both CNNs and RNNs (recurrent neural networks), for vision and audio applications.”

Further information

Aaeon’s AI Edge Computing Modules featuring the KL520 AI SoC are available for order at an undisclosed price. Presumably, these will eventually be available as individually priced accessories for UP boards and the like. More information may be found in Aaeon’s announcement and on its preliminary product pages for the M2AI-2280-520, M2AI-2242-520, and Mini-AI-520 modules. More on the KL520 AI SoC may be found on Kneron’s website.
