Movidius AI acceleration technology comes to a mini-PCIe card

Mar 6, 2018, by Eric Brown

Aaeon has launched the “UP AI Core” — a $69 mini-PCIe version of Intel’s Movidius Neural Compute Stick for neural network acceleration that’s designed to work with the UP Squared SBC and other Ubuntu-driven x86_64 computers.

As promised last week by Intel when it announced an Intel AI: In Production program for its USB stick form factor Movidius Neural Compute Stick, Aaeon has launched a mini-PCIe version of the device called the UP AI Core. It similarly integrates Intel’s AI-infused Myriad 2 Vision Processing Unit (VPU). Running neural networking and machine vision workloads locally over the mini-PCIe connection should provide faster response times than connecting to a cloud-based service.

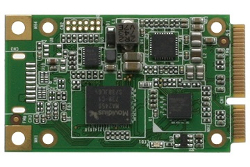

UP AI Core, front and back


The module, which is available for pre-order at $69 for delivery in April, is designed to “enhance industrial IoT edge devices with hardware accelerated deep learning and enhanced machine vision functionality,” says Aaeon. It can also enable “object recognition in products such as drones, high-end virtual reality headsets, robotics, smart home devices, smart cameras and video surveillance solutions.”

The UP AI Core is optimized for Aaeon’s Ubuntu-supported UP Squared hacker board, which runs on Intel’s Apollo Lake SoCs. However, it should work with any 64-bit x86 computer or SBC equipped with a mini-PCIe slot that runs Ubuntu 16.04. Host systems also require 1GB RAM and 4GB free storage. That presents plenty of options for PCs and embedded computers, although the UP Squared is currently the only x86-based, community-backed SBC equipped with a mini-PCIe slot.
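The host requirements listed above can be checked with a short script. What follows is a minimal sketch, not an Aaeon or Intel tool: the function names (`check_host`, `read_os_release`, `probe_local_host`) are hypothetical, and reading "1GB" and "4GB" as decimal gigabytes is an assumption. It does not test for the presence of a mini-PCIe slot, which cannot be detected reliably from software.

```python
import os
import platform
import shutil

# Assumption: "1GB RAM" and "4GB free storage" are read as decimal gigabytes.
MIN_RAM_BYTES = 1 * 10**9
MIN_FREE_BYTES = 4 * 10**9


def check_host(arch, os_id, os_version, ram_bytes, free_bytes):
    """Return a list of problems; an empty list means the host qualifies."""
    problems = []
    if arch != "x86_64":
        problems.append("CPU architecture is %s, not 64-bit x86" % arch)
    if (os_id, os_version) != ("ubuntu", "16.04"):
        problems.append("OS is %s %s, not Ubuntu 16.04" % (os_id, os_version))
    if ram_bytes < MIN_RAM_BYTES:
        problems.append("less than 1GB of RAM")
    if free_bytes < MIN_FREE_BYTES:
        problems.append("less than 4GB of free storage")
    return problems


def read_os_release(path="/etc/os-release"):
    """Parse the ID and VERSION_ID fields from an os-release file."""
    fields = {}
    try:
        with open(path) as fh:
            for line in fh:
                key, sep, value = line.strip().partition("=")
                if sep:
                    fields[key] = value.strip('"')
    except OSError:
        pass  # file missing: both fields come back empty
    return fields.get("ID", ""), fields.get("VERSION_ID", "")


def probe_local_host():
    """Gather the values for the machine this script runs on (Linux only)."""
    os_id, os_version = read_os_release()
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    free = shutil.disk_usage("/").free
    return check_host(platform.machine(), os_id, os_version, ram, free)


if __name__ == "__main__":
    for problem in probe_local_host() or ["host meets the listed requirements"]:
        print(problem)
```

Keeping `check_host` free of system calls makes it easy to test against made-up values, while `probe_local_host` supplies the real ones on a Linux machine.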

UP Squared (left) and Myriad 2 architecture


Aaeon had few technical details about the module, except to say it ships with 512MB of DDR RAM, and offers ultra-low power consumption. The UP AI Core’s mini-PCIe interface likely provides a faster response time than the USB link used by Intel’s $79 Movidius Neural Compute Stick. Aaeon makes no claims to that effect, however, perhaps to avoid disparaging Intel’s Neural Compute Stick or other USB-based products that might emerge from the Intel AI: In Production program.


Intel’s Movidius Neural Compute Stick

It’s also possible the performance difference between the two products is negligible, especially compared with the difference between either local-processing solution and a service reached over an Internet connection. Cloud-based connections for accessing neural networking services suffer from higher latency and reduced network bandwidth, reliability, and security, says Aaeon. The company recommends using the Linux-based SDK to “create and train your neural network in the cloud and then run it locally on AI Core.”

Performance issues aside, because a mini-PCIe module is usually embedded within a computer, it provides more security than a USB stick. On the other hand, that same trait hinders ease of mobility. Unlike the UP AI Core, the Neural Compute Stick can run on an ARM-based Raspberry Pi, but only with the help of the Raspbian Stretch desktop or an Ubuntu 16.04 VirtualBox instance.

In 2016, before it was acquired by Intel, Movidius launched its first local-processing version of the Myriad 2 VPU technology, called the Fathom. This Ubuntu-driven USB stick, which miniaturized the technology in the earlier Myriad 2 reference board, is essentially the same technology that re-emerged as Intel’s Movidius Neural Compute Stick.



Neural network processors can significantly outperform traditional computing approaches in tasks like language comprehension, image recognition, and pattern detection. The vast majority of such processors — which are often repurposed GPUs — are designed to run on cloud servers.

AIY Vision Kit

The Myriad 2 technology can translate deep learning frameworks like Caffe and TensorFlow into its own format for rapid prototyping. This is one reason why Google adopted the Myriad 2 technology for its recent AIY Vision Kit for the Raspberry Pi Zero W. The kit’s VisionBonnet pHAT board uses the same Movidius MA2450 chip that powers the UP AI Core. On the VisionBonnet, the processor runs Google’s open source TensorFlow machine intelligence library for neural networking, enabling visual perception processing at up to 30 frames per second.
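To put the 30 frames per second figure in concrete terms, the arithmetic can be sketched as below. The function names are hypothetical and the inference times in the usage note are made-up illustrations, not measured Myriad 2 results. The sketch also assumes frames are processed one at a time with no pipelining.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def sustains_rate(inference_ms, fps):
    """True if one inference fits inside the per-frame time budget."""
    return inference_ms <= frame_budget_ms(fps)
```

At 30 frames per second, `frame_budget_ms(30)` works out to roughly 33.3 ms per frame, so a hypothetical 25 ms inference would keep up while a hypothetical 40 ms inference would force dropped frames.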

Intel and Google aren’t alone in their desire to bring AI acceleration to the edge. Huawei released a Kirin 970 SoC for its Mate 10 Pro phone that provides a neural processing coprocessor, and Qualcomm followed up with a Snapdragon 845 SoC with its own neural accelerator. The Snapdragon 845 will soon appear on the Samsung Galaxy S9, among other phones, and will also be heading for some high-end embedded devices.

Last month, Arm unveiled two new Project Trillium AI chip designs intended for use as mobile and embedded coprocessors. Available now is Arm’s second-gen Object Detection (OD) Processor for optimizing visual processing and people/object detection. Due this summer is a Machine Learning (ML) Processor, which will accelerate AI applications including machine translation and face recognition.

Further information

The UP AI Core is available for pre-order at $69 for delivery in late April. More information may be found at Aaeon’s UP AI Core announcement and its UP Community UP AI Edge page for the UP AI Core.
